WOMAD

Womad (World of Music, Arts and Dance) is an international music and arts foundation known primarily for its festivals, held every year in several locations around the world.

“You never know where interesting news may come from” is a lesson I have learned over the years.

To prove it: the first time I came across information about Womad, I was reading my son's English schoolbook with him.

We owe the idea of this music, arts and dance festival to Peter Gabriel.

In 1982, together with a group of collaborators, the author of that immortal song in 7/4, whose harmonic theme lifted everyone's thoughts over that perfect green and up to the sky, launched the first festival in Somerset, at Shepton Mallet.

Later the project evolved into a mission: to create opportunities for cultural exchange and learning, and to bring the arts of different cultures to the widest possible audience through arts education and creative learning projects.

After all, Peter Gabriel's taste for blending cultures had already come through loud and clear in Shock the Monkey, with its mix of tribal percussion and electronic synths.

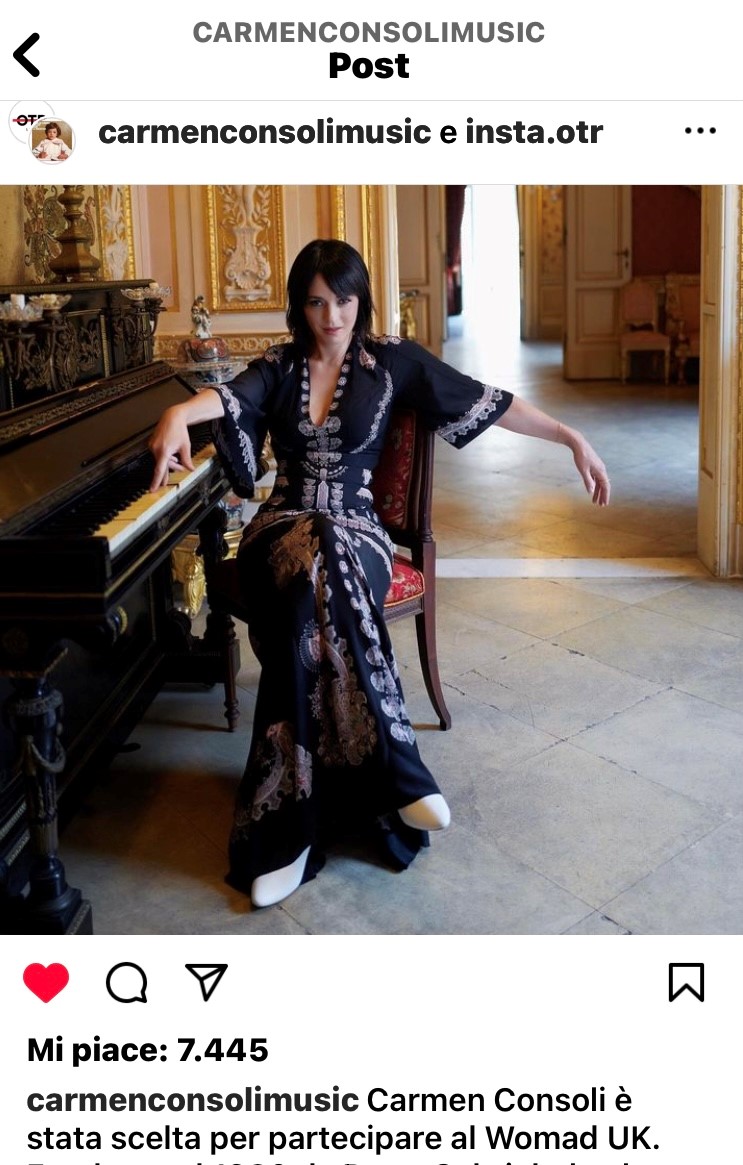

The big news about the Womad festival being held these days is that Carmen Consoli announced her participation with this picture.

La Cantantessa ("the Singeress").

I would say she is the perfect artist to represent the strength of roots and the richness of collaboration.

Carmen is the first Italian artist to perform at the world music festival.

Womad 2023 takes place July 27–30 at Charlton Park.

While we wait, we can watch highlights from last year's edition, the 40th.

Hi, I'm Claudia and this is KCDC.
